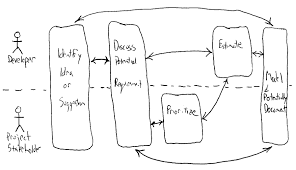

I believe that if the application under test has functionality that was not described in the requirements documents, it points to deeper problems in the software development process. Most testers would agree that it takes tremendous effort to uncover the unexpected behaviors of an application under test. Identifying hidden functionality demands serious work from testers, and that work costs precious time and resources.

In my experience, if the functionality isn't necessary for the purpose of the application, it should be removed. It may have unknown impacts on, or dependencies within, the application that the stakeholders never took into consideration.

If alien functionality (as I call it, because it was not described in the requirements documents) is not removed, for whatever reason, it should be documented in the test plan so that the additional testing needs, resources, and regression testing can be determined. Stakeholders and management should be made aware of any significant risks that might arise as a result of this alien functionality.

On the other hand, if this alien functionality has to be included for minor improvements to the user interface, and its effect on the application's overall functionality ranges from trivial to minor and does not pose a significant risk, it may be accommodated in the testing cycle with or without minor changes to the test plan.